Now that everyone's raving about Amazon's new TeeVee adaptation of Fallout, a pertinent question arises: just how primitive and industrial were PCs in 1997, when the OG Fallout game was released? After all, we're talking glass-tube CRT screens, ribbon IDE cables, less processing power than a bank card, and barely anything better than the monochromatic, diegetic Pip-Boy wrist computer that appears in the game itself.

Fallout's minimum system requirements in 1997 make for interesting reading: 16MB (yes, MB, not GB) of RAM, an Intel Pentium CPU, 1MB of video memory, plus a 640 x 480 pixel display and a glorious palette of 256 colors.

Of course, no self-respecting proto-tech blogger of the day would be satisfied with mere minimum specs. So what did the ultimate gaming rig look like when "Fallout" was released?

They'd have wanted an Intel Pentium II, which at the time was a clear cut above AMD's K6. Specifically, they'd probably have been eyeing a mighty 300 MHz model.

At the time, AnandTech found the PII to be more than twice as fast as the K6 in its customary Quake II timedemo, delivering buttery smooth results of 66 fps at 640 x 480 compared with the K6's sluggish 25 fps.

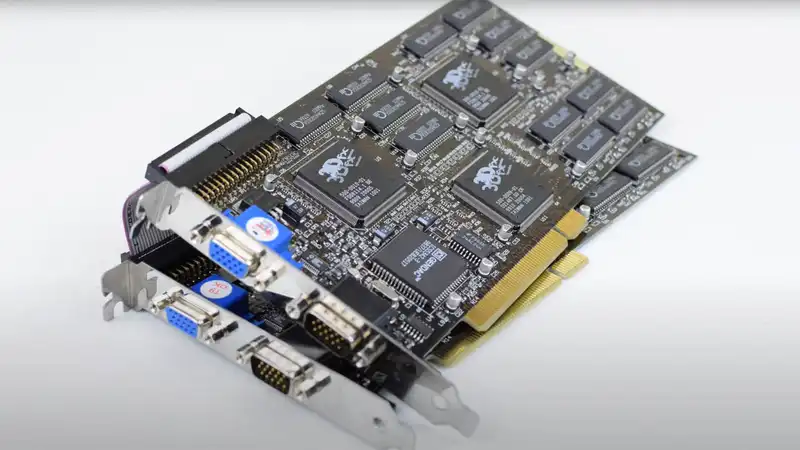

If the CPU choice was an easy one, picking a video solution back then was anything but. Dedicated PC graphics were still in their infancy, and the market was a veritable Wild West of competing standards, formats, and form factors.

For starters, the choice was between an old-fashioned PCI card and the new-fangled AGP (Accelerated Graphics Port). Yes, a port dedicated to graphics.

You also had to decide whether you wanted a single card that could render both 2D and 3D, or whether to split those duties between separate boards. Incidentally, the best 3D results in AnandTech's late-1997 testing came from Creative Labs' 3D Blaster, built around 3dfx's 3D-only Voodoo 2 chip, although the Voodoo 2 didn't actually go on sale until February 1998.

It hit 66 fps in the Quake II timedemo, beating the next-best board, which used Nvidia's new Riva 128 chip. Other graphics chipsets on offer at the time included ATI's 3D Rage Pro, the PowerVR PCX2, the Rendition Verite V2100, the 3Dlabs Permedia 2, and the Matrox Millennium II. There was certainly no shortage of choice.

Of course, the ultimate solution at the time was to run two Voodoo 2s in SLI (Scan-Line Interleave), which not only doubled rendering throughput but also raised the maximum supported resolution to a dizzying 1,024 x 768.

Now, just to head off any indignant comments, OG Fallout was, of course, not a 3D game. A 3D engine was apparently considered during development, but the technology of the day couldn't capture the level of detail and artistic atmosphere the team was after. Developer Tim Cain worked on the game alone for six months before gradually enlisting the help of his colleagues at Interplay.

Instead, "Fallout" was built around an isometric projection, which gave a 3D feel to what was ultimately a 2D engine. The rest, as they say, is history.

As for how it performed on the hardware of the time, that's quite difficult to dig up from the historical record. What's more, frame rates don't apply to a 2D projected title like "Fallout" in quite the same way they do to a full 3D game.

So it isn't possible to say definitively how the various video chipsets of the time compared when running "Fallout". I'll defer that question to the comments below.

Incidentally, my first graphics card was an Nvidia Riva TNT2, which didn't appear until 1999 and was made on TSMC 250nm silicon. The rest of my rig probably had 32MB of RAM, a 5GB IDE hard drive, and a 17-inch CRT with a ridiculous 1,600 x 1,200 maximum resolution and a Sony Trinitron tube that was flat along the vertical axis. Those were truly the days.
