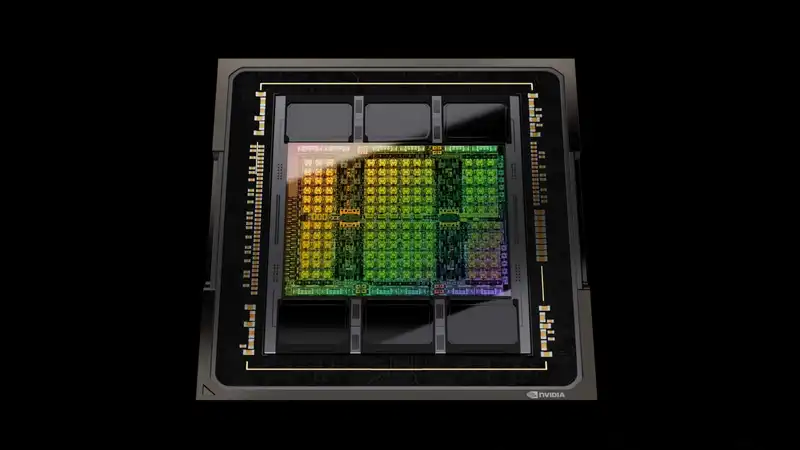

Remember when we reported market data suggesting that Meta had bought 150,000 of Nvidia's H100 AI chips?

Most estimates put the price of the Nvidia H100 GPU between $20,000 and $40,000. Meta and Microsoft are Nvidia's two largest customers for the H100.

However, rough calculations based on Nvidia's reported AI revenues and H100 shipments suggest that Meta is unlikely to be paying much, if anything, below the lower end of that price range. At the very least, there is no doubt that Meta is spending billions of dollars on Nvidia's silicon.

Meta has also revealed details about how its H100s are deployed (via ComputerBase). Apparently, they are installed in clusters of 24,576 GPUs used to train large language models. Even at the low-end estimate of $20,000 per H100, that works out to roughly $490 million per cluster. Meta offers insight into two slightly different versions of these clusters.

To quote ComputerBase, one "relies on RoCE (Remote Direct Memory Access over Converged Ethernet) based on Arista 7800, Wedge400, and Minipack2 OCP components, and the other relies on Nvidia's Quantum-2 InfiniBand solution. Both clusters communicate via a 400 Gbps high-speed interface."

Frankly, we couldn't tell the difference between Arista 7800's butt and Quantum-2 InfiniBand's elbow. The point is that building AI training hardware takes more than just buying a pile of GPUs; connecting those GPUs together is a major technical challenge in its own right.
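For a rough sense of scale, the snippet below multiplies the 24,576 GPUs in one cluster by both ends of the commonly cited $20,000 to $40,000 price range. It's a back-of-envelope sketch rather than Meta's actual bill: it assumes a flat per-unit price, ignores networking, servers, and power entirely, and the constant and function names are our own.

```python
# Back-of-envelope GPU spend for one Meta training cluster.
# Assumption: a flat per-GPU price; networking, servers, and power are NOT included.
GPUS_PER_CLUSTER = 24_576
PRICE_LOW_USD = 20_000   # low end of the commonly cited H100 price range
PRICE_HIGH_USD = 40_000  # high end of that range


def cluster_gpu_cost(gpus: int, unit_price: int) -> int:
    """Return the total GPU spend in dollars (hypothetical helper)."""
    return gpus * unit_price


low = cluster_gpu_cost(GPUS_PER_CLUSTER, PRICE_LOW_USD)
high = cluster_gpu_cost(GPUS_PER_CLUSTER, PRICE_HIGH_USD)
print(f"${low / 1e6:,.1f}M to ${high / 1e6:,.1f}M per cluster")
```

Run as-is, it prints `$491.5M to $983.0M per cluster`, which is why even the cheapest plausible estimate lands at around half a billion dollars in GPUs alone.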

What is striking, however, is Meta's target of 350,000 H100s. Meta was estimated to have bought 150,000 H100s in 2023, so hitting that 350,000 goal would mean buying another 200,000 this year.
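Putting a price on that gap is simple arithmetic. The sketch below assumes, purely for illustration, that the same $20,000 to $40,000 per-unit range applies to this year's purchases. Meta's real pricing isn't public, and the variable names are hypothetical.

```python
# Hypothetical arithmetic on the article's figures, not Meta's actual spend.
TARGET_H100S = 350_000
BOUGHT_2023 = 150_000               # market estimate for 2023
PRICE_RANGE_USD = (20_000, 40_000)  # assumed per-GPU price range

still_to_buy = TARGET_H100S - BOUGHT_2023
# Spend at the low and high ends of the assumed price range.
spend_low, spend_high = (still_to_buy * price for price in PRICE_RANGE_USD)
print(f"{still_to_buy:,} more GPUs, about ${spend_low / 1e9:.1f}B to ${spend_high / 1e9:.1f}B")
```

This prints `200,000 more GPUs, about $4.0B to $8.0B`, which is consistent with the "billions of dollars" on Nvidia silicon mentioned earlier.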

According to figures released late last year by research firm Omdia, Meta's only rival in terms of H100 purchases was Microsoft. Even Google was believed to have bought only 50,000 units in 2023, so it would need to increase its purchases by a truly spectacular amount just to approach Meta's volume.

For reference, Omdia predicts that the overall market for these AI GPUs will be twice as large in 2027 as it was in 2023. Seen in that light, Meta buying a huge number of H100s in 2023, and then again this year, is well in line with the available data.
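A doubling over four years sounds dramatic, but it's worth translating into a yearly figure. The sketch below computes the implied compound annual growth rate, assuming (our assumption, not Omdia's) that growth compounds smoothly at a constant rate from 2023 to 2027.

```python
# Implied compound annual growth rate if a market doubles from 2023 to 2027.
# Assumption: smooth, constant year-on-year growth.
GROWTH_MULTIPLE = 2.0
YEARS = 2027 - 2023

cagr = GROWTH_MULTIPLE ** (1 / YEARS) - 1
print(f"Implied growth: {cagr:.1%} per year")
```

That comes out at roughly 18.9% per year. Real markets rarely grow that evenly, so treat it as a way of reading the forecast rather than a forecast in itself.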

What this means for us is anyone's guess. Everything is hard to predict: how this enormous demand for AI GPUs will affect the supply of humble graphics chips for PC gaming, and exactly how AI will progress over the next year or two.

As if these numbers weren't big enough, Omdia predicts that Nvidia's revenue from these big data center GPUs will double by 2027. Not all of them will be used for large language models or similar AI applications, but many of them will be.

It is interesting that Omdia is so bullish about Nvidia's prospects in this market, despite the fact that many of Nvidia's largest customers in this market are actually planning to build their own AI chips. In fact, Google and Amazon have already done so, which is probably why they are not buying as many Nvidia chips as Microsoft and Meta.

We can also expect strong competition from AMD's MI300 GPU and its successors. Startups such as Tenstorrent, led by Jim Keller, one of the most highly regarded chip architects on the planet, are also looking to get in.

But one thing is certain: these numbers make the game graphics market look rather puny. We have created a monster.
