Imagine being able to recall memories with near-perfect accuracy. As we move toward an AI-centric future, that dream may be closer to coming true. Researchers are now using Stable Diffusion to read human brain activity and reconstruct fairly accurate, high-resolution images.

Yu Takagi and Shinji Nishimoto of Osaka University's Graduate School of Frontier Biosciences recently wrote a paper (PDF) showing that it is possible to reconstruct high-resolution images with latent diffusion models by reading human brain activity recorded with functional magnetic resonance imaging (fMRI), "without requiring training or fine-tuning of complex deep generative models" (via Vice).

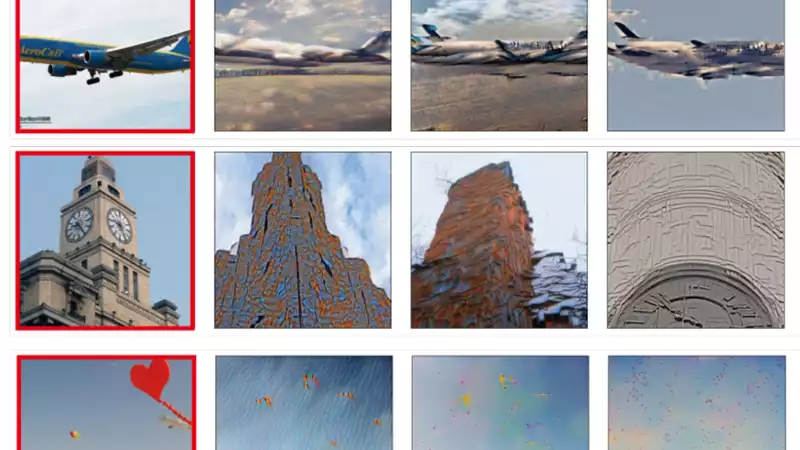

The results of this study are hard to believe, given that we still don't fully understand how brain activity translates into what we see. The fact that Takagi and Nishimoto were able to pull high-resolution images out of latent space using human brain activity is remarkable.

Granted, by high resolution we mean 512 x 512 pixels. Still, that is much better than the 256 pixels earlier attempts managed, and the "semantic fidelity" is much higher. In other words, the reconstructions are actually recognizable and representative of the original images participants were shown.

In previous studies, the researchers explain, generative models such as GANs had to be trained, and in some cases fine-tuned, on the same datasets used in the fMRI experiments. That is challenging not only because such models are tricky to work with, but also because the training material is extremely limited. The Osaka researchers appear to have worked around these limitations with Stable Diffusion.

We've all seen the Black Mirror episode "The Entire History of You," a frightening portrayal of a future in which implants record our daily lives so that every moment can later be scrutinized, ruining our relationships.

But before you file the idea of AI-assisted visual recall away in the dystopian corner of your brain, consider its practical uses! The day may come when people who can't speak, or paralyzed people who can't take a photo of something to show later, will be able to show exactly what they are thinking by passing their brain activity through an AI.

As one of the first (if not the first) studies to use a diffusion model in this way, it may at least help paint such algorithms in a better light. Stable Diffusion has come under fire recently, at least in the art world, because some diffusion models scrape the internet and regurgitate the front page of ArtStation, purely for the financial benefit of the lazy.

However, if the data is used responsibly and these models are trained to benefit accessibility, allowing people to accurately represent their own inner worlds and communicate in new ways, then I am all for it.
