At Nvidia's GTC keynote today, CEO Jen-Hsun Huang announced the upcoming rollout of a collection of large language model (LLM) frameworks known as Nvidia AI Foundations.

Huang is so confident in the AI Foundations package that he calls it the "TSMC of custom large language models." I suppose that sits well alongside his dramatic comment that AI is having an "iPhone moment."

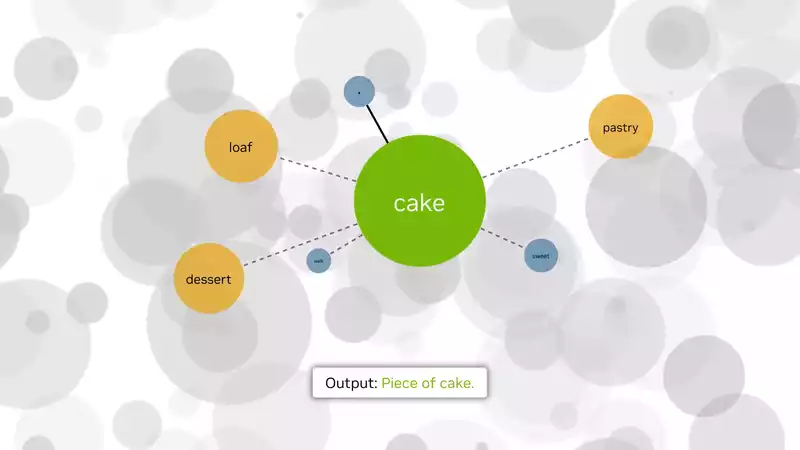

The Foundations package includes the Picasso and BioNeMo services, which serve the media and medical industries, respectively, and NeMo, a framework for companies looking to integrate large language models into their workflows. NeMo is "for building custom language, text-to-text generative models" that can inform what Nvidia calls "intelligent applications."

A little something called P-Tuning allows companies to train their own custom language models to create more relevant branded content, compose emails with a personalized writing style, or summarize financial documents.

Hopefully, it will take the burden off the everyman and stop bosses from yelling, "Just put it in a chatbot."

NeMo's language models come in 8 billion, 43 billion, and 530 billion parameter versions, giving companies distinct tiers with widely varying levels of capability.

Incidentally, ChatGPT's original GPT-3 model has 175 billion parameters, and while OpenAI does not disclose how many parameters GPT-4 has, AX Semantics estimates about 1 trillion.

In short, NeMo will not be a direct competitor to ChatGPT and may not have the same parameter depth, but as a framework for designing large language models, it will change the face of every industry it touches. That much is certain.
