One of the most amazing developments in chip manufacturing in recent years has been chiplets and the stacking of those chiplets. The possibilities are endless. At CES 2023, AMD showed how stacking extra cache onto the processor can boost gaming frame rates with its Ryzen 7000X3D CPUs, and it had something equally impressive in store for data center professionals.

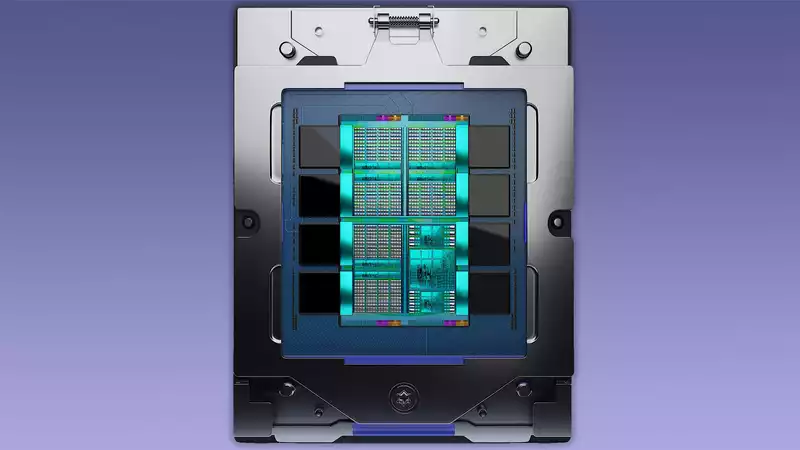

AMD is using 3D chip-stacking technology to integrate a CPU and a GPU into one giant chip: the AMD Instinct MI300.

Having both a CPU and a GPU on one chip isn't new in itself. AMD has been producing APUs, chips that combine a CPU and a GPU under one roof, for years, and it describes the MI300 as an APU too.

But what's cool about AMD's latest APU is its size. It's huge.

It is a data center accelerator with 146 billion transistors. That's nearly twice the transistor count of Nvidia's AD102 GPU (the GPU in the RTX 4090), which has 76.3 billion. Physically, it's huge too: AMD CEO Lisa Su held up an MI300 on stage at CES, and either she has shrunk or this thing is the size of a decent-sized stroopwafel. Cooling this chip must be an enormous job.
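As a quick sanity check of the "nearly twice" claim, here is a minimal Python sketch that simply divides the two transistor counts quoted above. The constant names are illustrative labels, not anything from AMD or Nvidia.

```python
# Transistor counts quoted in the article, in billions.
MI300_TRANSISTORS_B = 146.0   # AMD Instinct MI300
AD102_TRANSISTORS_B = 76.3    # Nvidia AD102 (RTX 4090)

# How many times more transistors the MI300 has than AD102.
ratio = MI300_TRANSISTORS_B / AD102_TRANSISTORS_B
print(f"MI300 has {ratio:.2f}x the transistors of AD102")
# prints: MI300 has 1.91x the transistors of AD102
```

A ratio of about 1.91 is what "nearly twice" refers to: close to double, but not quite.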

The GPU side of this chip is based on AMD's CDNA 3 architecture. AMD splits its graphics designs into RDNA for gaming and CDNA for compute, the latter built purely for compute performance. The GPU is packaged with a Zen 4 CPU and 128GB of HBM3 memory.

The MI300 has nine 5nm chiplets stacked on top of four 6nm chiplets. That means nine CPU or GPU chiplets (likely six GPU chiplets and three CPU chiplets) sit on a four-piece base die, with memory around the periphery. AMD hasn't revealed the exact configuration yet, but we do know that everything is connected by its fourth-generation Infinity Architecture interconnect.

The idea is that putting everything in one package, so data has fewer hoops to jump through, makes for a far more efficient product than one that keeps calling out to off-chip memory and slowing the whole process down. Since computing at this level is all about bandwidth and efficiency, this approach makes a lot of sense. It's a similar principle to the Infinity Cache in AMD's RDNA 2 and RDNA 3 GPUs: keeping more data close at hand reduces the need to go searching far and wide for it, which keeps frame rates high.

There are several reasons why we won't see an MI300-style chip for gaming, though. For one, whatever AMD charges for the MI300 will be far beyond what most gamers can afford. For another, nobody has yet figured out how to make multiple compute chiplets on a gaming GPU appear to a game as a single GPU without special coding. We went through that with SLI and CrossFire, and it didn't work out.

But the MI300 is very big and very powerful: AMD touts an almost 8x improvement in AI performance over its own Instinct MI250X accelerator. The MI250X is also a multi-chiplet monster, with 58 billion transistors, but that once-staggering figure now seems a bit small. Beating that chip was no easy task, yet the MI300 has done it, and AMD says it is also five times more efficient.

The MI300 is due later this year, so it's still a way off. Either way, it's more something to marvel at than something to splash out on, unless you work in a data center or in AI and have the big bucks to match.
